Why Anthropic Burned the Books

The Claude maker had a good reason for its destructive scans

Anthropic was forced to scan its books in a destructive manner for two reasons, both of which are perfectly rational and neither of which is its fault.

On Monday, court documents revealed that AI company Anthropic spent millions of dollars physically scanning print books to build Claude, an AI assistant similar to ChatGPT. In the process, the company cut millions of print books from their bindings, scanned them into digital files, and threw away the originals solely for the purpose of training AI—details buried in a copyright ruling on fair use whose broader fair use implications we reported yesterday.

The 32-page legal decision tells the story of how, in February 2024, the company hired Tom Turvey, the former head of partnerships for the Google Books book-scanning project, and tasked him with obtaining "all the books in the world." The strategic hire appears to have been designed to replicate Google's legally successful book digitization approach—the same scanning operation that survived copyright challenges and established key fair use precedents.

While destructive scanning is a common practice among some book digitizing operations, Anthropic's approach was somewhat unusual due to its documented massive scale. By contrast, the Google Books project largely used a patented non-destructive camera process to scan millions of books borrowed from libraries and later returned. For Anthropic, the faster speed and lower cost of the destructive process appears to have trumped any need for preserving the physical books themselves, hinting at the need for a cheap and easy solution in a highly competitive industry.

Ultimately, Judge William Alsup ruled that this destructive scanning operation qualified as fair use—but only because Anthropic had legally purchased the books first, destroyed each print copy after scanning, and kept the digital files internally rather than distributing them. The judge compared the process to "conserv[ing] space" through format conversion and found it transformative. Had Anthropic stuck to this approach from the beginning, it might have achieved the first legally sanctioned case of AI fair use. Instead, the company's earlier piracy undermined its position.

First, Anthropic was only forced to purchase actual books due to the nonsensical copyright laws surrounding so-called “book piracy”. The concept is ridiculous, since there is no form of theft that leaves the original possessor with his original possession.

How is book piracy any different, in any way, shape, or form, than people utilizing ideas that have been articulated, in speech or written form, without compensating the originator? I coined the term “Sigma Male” in 2010, a term which has been utilized over 50 billion times by many different people since then. I hold the copyright on the phrase, which was asserted right on the left sidebar of the blog on which it first appeared. Is every single use of that term, most of which have been uncredited and all of which have been uncompensated, therefore “piracy”?

Of course not! It’s fair use. Scanning a text for AI training is no different than reading a book for intellectual stimulation.

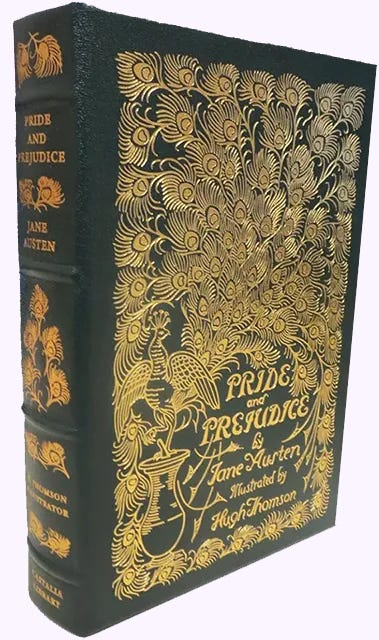

Second, a destructive scan is not only faster and less expensive, it is also much higher quality. The scanning resolution is 1200 dpi instead of 300 dpi, which allows for more precision in both optical character recognition and reprints. Castalia Library acquired and destroyed a rare first edition of Jane Austen’s Pride and Prejudice in order to create 1,100 more high-quality leatherbound editions; we have since done the same with one of only 300 copies in existence of the Bibliography of Military Books up to 1642, published in 1900, as well as four other books that we are republishing.

Now, unlike Anthropic, Castalia Library is a book bindery, so we rebind the books that we scan and “destroy”, usually leaving them in much better shape than they were in when we acquired them. And the reality is that most of the scanned books were destined to be lost over time, so the scanning will preserve them, and at a higher level of quality than the archival efforts of Google and other organizations engaged in non-destructive scanning.

One merely hopes that Anthropic will make those higher-quality scans available to libraries and to the public, at least those scans of books that are in the public domain. In summary, there are many things for which the various AI companies can, and should, be criticized, but destroying books for AI training is not one of them.

That would probably have been prohibitively expensive.

Anthropic could have rebound the books and then auctioned them off for charity or other purposes.