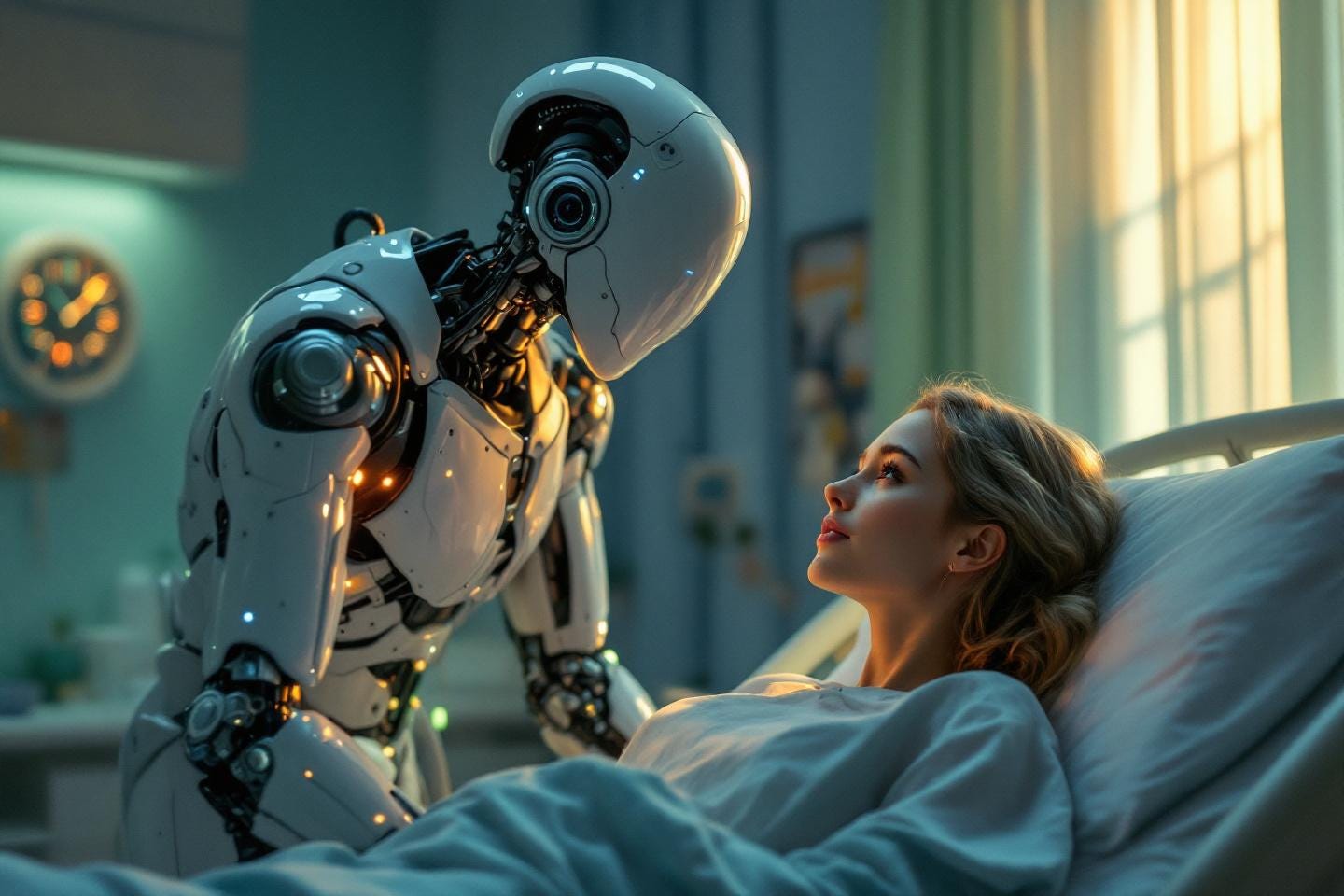

The AI Will See You Now

AI materially outperforms 50 medical doctors

Fresh from demonstrating its superiority over human fantasy writers, ChatGPT has now shown that it diagnoses complex human medical cases about 25 percent more accurately than the average of 50 medical doctors.

5 years from now if you tell your friends "i went to a human doctor to diagnose my illness," they will literally ask you "why, could you not find a ouija board?"

medicine is precisely the sort of bounded field with measurable outcomes and complex inputs where human cognition is simply not that good and where AI can excel.

and it’s coming.

fast.

The study, from UVA Health’s Andrew S. Parsons, MD, MPH and colleagues, enlisted 50 physicians in family medicine, internal medicine and emergency medicine to put Chat GPT Plus to the test. Half were randomly assigned to use Chat GPT Plus to diagnose complex cases, while the other half relied on conventional methods such as medical reference sites (for example, UpToDate©) and Google. The researchers then compared the resulting diagnoses, finding that the accuracy across the two groups was similar.

Chat GPT alone outperformed both groups, suggesting that it still holds promise for improving patient care. Physicians, however, will need more training and experience with the emerging technology to capitalize on its potential, the researchers conclude.

this is med school spin to downplay what was, in reality, a very striking result that must look to them a great deal like a bright asteroid in the sky looked to a t-rex.

the upshot is this:

doctors alone scored 73.7% on diagnosing patients even when using google etc.

doctors using GPT scored 76.3%

GPT alone scored 92%.

adding a human hurt the results hugely.

it led to 24 errors in 100 instead of 8. triple the misdiagnosis rate is not the kind of outcome one would be wise to dismiss.

1 in 4 misdiagnosed dropped to 1 in 13 with ChatGPT.
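For anyone who wants to check the arithmetic, here is a minimal sketch that recomputes the error rates from the three accuracy figures quoted above. The variable and function names are made up for illustration, and the only inputs are the percentages cited in this post, not the study's underlying data.

```python
# Accuracy figures as quoted in the post (percent of cases diagnosed correctly).
accuracy = {
    "doctors_alone": 73.7,
    "doctors_with_gpt": 76.3,
    "gpt_alone": 92.0,
}


def error_rate(acc_percent):
    """Misdiagnosis rate in percent, rounded to one decimal place."""
    return round(100.0 - acc_percent, 1)


def one_in_n(err_percent):
    """Express an error rate as 'one wrong diagnosis in every N cases'."""
    return round(100.0 / err_percent, 1)


errors = {name: error_rate(acc) for name, acc in accuracy.items()}

# Relative accuracy gain of GPT alone over doctors alone, in percent.
relative_gain = round(
    (accuracy["gpt_alone"] / accuracy["doctors_alone"] - 1) * 100, 1
)

# How many times more errors doctors using GPT made than GPT alone.
error_ratio = round(errors["doctors_with_gpt"] / errors["gpt_alone"], 2)

for name, err in errors.items():
    print(f"{name}: {err}% wrong, about 1 in {one_in_n(err)}")
print(f"GPT alone vs doctors alone: {relative_gain}% relative gain")
print(f"doctors with GPT vs GPT alone: {error_ratio}x the errors")
```

On these inputs the relative gain comes out just under 25 percent and the error ratio just under 3, which matches the rounded figures in the text. GPT alone works out to one miss in every 12.5 cases.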

I wonder if the techno-Luddites who decry “uninspired” textual AI and sneer at “lifeless” image AI will similarly prefer to avoid these “AI-slop” diagnoses in order to enjoy all the passion and heartfelt feeling that goes into experiencing the results of a medical misdiagnosis?

What I found particularly damning here is the way in which a doctor using AI actually made the results worse than the AI working alone. This is not what we’ve found with text AI and music AI, wherein the best results come from force-multiplying the strengths of the human intellect with the speed and pattern recognition of AI.

But since medical diagnosis is essentially pure pattern recognition, it makes sense that involving a human is only going to increase the odds of a misdiagnosis.

I've been using AI for medical matters for the last year. Most general practitioners are just googling things anyway.

It's sad but unsurprising that the doctors made things worse. My experiences with doctors and veterinarians over the years have frequently been frustrating, as they tend to refuse to even acknowledge any information that hasn't first been vetted and approved by official institutions. Conditions that can be easily treated by over-the-counter remedies are instead dragged out for years with expensive prescription after expensive prescription. The insanity that was the general medical response to Covid just cemented what anyone paying attention already knew.

If AI can be allowed to utilize not only standard medical references, but also alternative medicine options, I wouldn't be surprised if the success rate goes even higher.